Stop Talking About 'Finding The Next Paul Christiano'

Epistemic status: trying to articulate a feeling, quickly dashing off a post about it (20 minutes writing).

In longtermist AI field-building conversations, you’ll sometimes (often?) hear the goal of getting talented people to work on AI alignment phrased as “finding the next Paul Christiano.”[1]

I think that this and similar phrases should be retired.

The basic thought motivating this language is that progress on the alignment problem will mostly be driven by a handful of extraordinarily productive researchers. Is this model of solving the alignment problem helpful? And, supposing that it is, is speaking about it in this way helpful?

I’ll set aside the first question regarding the correspondence between the model and reality because I have no particular insight on it (though I think it’s very important, and ultimately suspect that the model is implausible).

But I think that the framing is unhelpful, even if the model is ultimately correct. A few reasons against:

- Identifying a specific individual as emblematic of the type of person you’re looking for contributes to groupthink and hero-worship. Even when the speakers themselves don’t hold this view, their interlocutors (especially newer ones) will infer that Christiano is a uniquely brilliant researcher to hold in high esteem and a figure to whom one ought to defer. It seems generally counterproductive for a field aimed at unsolved problems to enshrine particular views on the basis of their messenger.

-

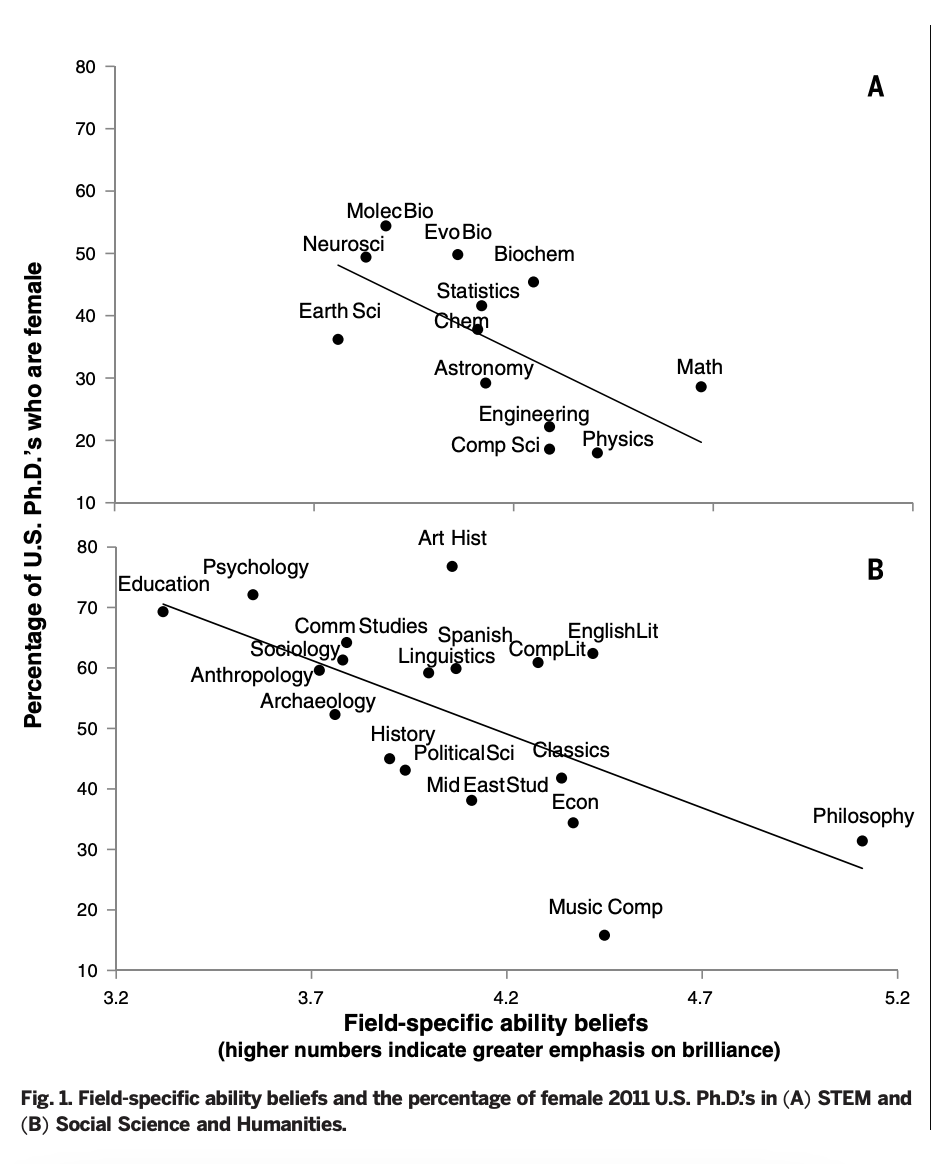

There’s some (correlational) evidence suggesting that the degree to which fields emphasize individual brilliance tracks pretty closely with women’s underrepresentation in those fields.23 Even if it’s the case that the underlying model is roughly right (“a few brilliant individuals will solve the problem”), it seems likely that emphasizing individual brilliance selects not for actual competence but instead for overconfidence. For prospective contributors to the field, hearing that these field-builders are looking for uniquely talented and productive researchers could easily cause one to doubt their own ability to contribute.

Unless one thinks that potential to work on the alignment problem is for some reason strongly correlated with confidence in one’s own brilliance, this seems likely to push away talent that could be immensely helpful.

I don’t really see countervailing reasons in favor of this phrasing, either.

Endnotes

1. I’m not very active in these circles and so don’t really know how often this is said. Over the course of a week-long AI-risk workshop plus a summer in Berkeley, I heard it at least a dozen times.

2. Leslie, Sarah-Jane, Andrei Cimpian, Meredith Meyer, and Edward Freeland. “Expectations of Brilliance Underlie Gender Distributions across Academic Disciplines.” Science 347, no. 6219 (January 16, 2015): 262–65. https://doi.org/10.1126/science.1261375.

3. I haven’t looked into the methodology behind this result and so won’t stake too much on the findings.